Table of Contents

- Removal of Contaminants by Passive Treatment Systems

- Evaluation of Treatment System Performance

- Dilution Adjustments

- Loading Limitations

- Study Sites

- Effects of Treatment Systems on Contaminant Concentrations

- Dilution Factors

- Removal of Metals from Alkaline Mine Water

- Removal of Metals and Acidity from Acid Mine Drainage

- Design and Sizing of Passive Treatment Systems

- Characterization of Mine Drainage Discharges

- Calculations of Contaminant Loadings

- Classification of Discharges

- Passive Treatment of Net Alkaline Water

- Passive Treatment of Net Acid Water

- Pretreatment of Acidic Water With ALD

- Treating Mine Water With Compost Wetland

- Operation and Maintenance

Removal of Contaminants by Passive Treatment Systems

Chapter 2 described chemical and biological processes that decrease concentrations of mine water contaminants in aquatic environments. The successful utilization of these processes in a mine water treatment system depends, however, on their kinetics. Chemical treatment systems function by creating chemical environments where metal removal processes are very rapid. The rates of chemical and biological processes that underlie passive systems are often slower than their chemical system counterparts and thus require that mine water be retained longer before it can be discharged. Retention time is gained by building large systems such as wetlands. Because the land area available for wetlands on minesites is often limited, the sizing of passive treatment systems is a crucial aspect of their design. Unfortunately, in the past, most passive treatment systems have been sized based on guidelines that ignored water chemistry or on available space, rather than on comparisons of contaminant production by the mine water discharge and expected contaminant removal by the treatment system. Given the absence of quantitative sizing standards, wetlands have been constructed that are both vastly undersized and oversized.

In this chapter, rates of contaminant removal are described for 13 passive treatment systems in western Pennsylvania. The systems were selected to represent the wide diversity of mine water chemical compositions that exist in the eastern United States. The rates that are reported from these sites are the basis of treatment system sizing criteria suggested in chapter 4.

The analytical approach used to quantify the performance of passive treatment systems in this chapter differs from the approach used by other researchers in several respects. First, contaminant removal is evaluated from a rate perspective, not a concentration perspective. Second, changes in contaminant concentrations are partitioned into two components: because of dilution from inputs of freshwater, and because of chemical and biological processes in the wetland. In the evaluations of wetland performance, only the chemical and biological components are considered. Third, treatment systems, or portions of systems, were included in the case studies only if contaminant concentrations were high enough to ensure that contaminant removal rates were not limited by the absence of the contaminant. These unique aspects of the research are discussed in further detail below.

Evaluation of Treatment System Performance

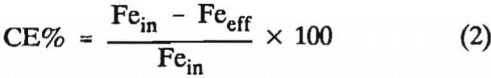

To make reliable evaluations of wetland performance, a measure should be used that allows comparison of contaminant removal between systems that vary in size and in the chemical composition and flow rate of the mine water they receive. In the past, concentration efficiency (CE%) has been a common measure of performance. Using iron concentration as an example, the calculation is

$$\mathrm{CE\%} = \frac{\mathrm{Fe_{in}} - \mathrm{Fe_{eff}}}{\mathrm{Fe_{in}}} \times 100$$

where the subscripts “in” and “eff” represent wetland influent and effluent sampling stations and Fe concentrations are in milligrams per liter.

Except in carefully controlled environments, CE% is a very poor measure of wetland performance. The efficiency calculation results in the same measure of performance for a system that lowers Fe concentrations from 300 to 100 mg·L-¹ as for one that lowers concentrations from 3 to 1 mg·L-¹. Neither the flow rate of the drainage nor the size of the treatment system is incorporated into the calculation. As a result, the performances of systems have been compared without accounting for differences in flow rate (which vary from <10 to >1,000 L·min-¹) or for differences in system size (which vary from <0.1 to >10 ha).
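The weakness of CE% can be checked with a few lines of arithmetic. The sketch below assumes the usual definition of concentration efficiency (percent decrease between influent and effluent) and uses the two concentration pairs quoted above; the function name is illustrative only.

```python
def concentration_efficiency(fe_in: float, fe_eff: float) -> float:
    """Percent decrease in concentration between influent and effluent (mg/L)."""
    if fe_in <= 0:
        raise ValueError("influent concentration must be positive")
    return (fe_in - fe_eff) / fe_in * 100.0


# The two cases from the text: very different waters, identical efficiency.
high_strength = concentration_efficiency(300.0, 100.0)
low_strength = concentration_efficiency(3.0, 1.0)

print(f"300 -> 100 mg/L: CE = {high_strength:.1f}%")
print(f"  3 ->   1 mg/L: CE = {low_strength:.1f}%")
```

Both calls return the same percentage even though the first system lowers the Fe concentration by 200 mg·L-¹ and the second by only 2 mg·L-¹, and neither flow rate nor system size enters the calculation.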

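A more appropriate method for measuring the performance of treatment systems calculates contaminant removal from a loading perspective. The daily load of contaminant received by a wetland is calculated from the product of concentration and flow rate data. For Fe, the calculation is

$$\mathrm{Fe}(\mathrm{g \cdot d^{-1}})_{\mathrm{in}} = 1.44 \times \mathrm{Flow}\,(\mathrm{L \cdot min^{-1}}) \times \mathrm{Fe_{in}}\,(\mathrm{mg \cdot L^{-1}})$$

where g·d-¹ is grams per day and 1.44 is the unit conversion factor needed to convert minutes to days and milligrams to grams (1,440 min·d-¹ divided by 1,000 mg·g-¹).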

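The contaminant load is apportioned to the downflow treatment system by dividing by a measure of the system’s size. In this study, treatment systems are sized based on their surface area (SA), measured in square meters,

$$\mathrm{Fe}(\mathrm{g \cdot m^{-2} \cdot d^{-1}})_{\mathrm{in}} = \frac{\mathrm{Fe}(\mathrm{g \cdot d^{-1}})_{\mathrm{in}}}{\mathrm{SA}}$$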

The daily mass of Fe removed by the wetland between two sampling stations, Fe(g·d-¹)rem, is calculated by comparing contaminant loadings at the two points,

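$$\mathrm{Fe}(\mathrm{g \cdot d^{-1}})_{\mathrm{rem}} = \mathrm{Fe}(\mathrm{g \cdot d^{-1}})_{\mathrm{in}} - \mathrm{Fe}(\mathrm{g \cdot d^{-1}})_{\mathrm{eff}}$$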

An area-adjusted daily Fe removal rate is then calculated by dividing the load removed by the surface area of the treatment system lying between the sampling points,

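$$\mathrm{Fe}(\mathrm{g \cdot m^{-2} \cdot d^{-1}})_{\mathrm{rem}} = \frac{\mathrm{Fe}(\mathrm{g \cdot d^{-1}})_{\mathrm{rem}}}{\mathrm{SA}}$$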

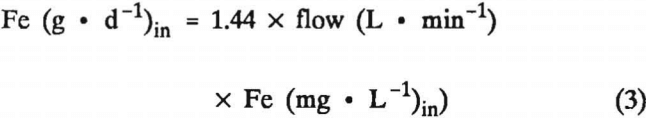

To illustrate the use of contaminant loading and contaminant removal calculations, consider the hypothetical water quality data presented in table 9.

In systems A and B, changes in Fe concentrations are the same (60 mg·L-¹), but because system B receives four times more flow and thus higher Fe loading, it actually removes four times more Fe from the water. The concentration efficiencies of the two wetlands are equivalent, but the masses of Fe removed are quite different.

Data are shown for system C for three sampling dates on which flow rates and influent iron concentrations vary. On the first date (C1), the wetland removes all of the Fe that it receives. On the next two dates (C2 and C3), Fe loadings are higher and the wetland effluent contains Fe. From an efficiency standpoint, performance is best on the first date and is worst on the third date. From an Fe-removal perspective, the system is removing the least amount of Fe on the first date. On the second and third dates, the wetland removes similar amounts of iron (2,880 and 3,024 g·d-¹). Variation in effluent chemistry results not from changes in the wetland’s Fe-removal performance, but from variation in influent Fe loading.

Lastly, consider a comparison of wetland systems of different sizes. System D removes more iron than any wetland considered (5,400 g·d-¹), but it is also larger. One would expect that, all other factors being equal, the largest wetland would remove the most Fe. When wetland area is incorporated into the measure by calculating area-adjusted Fe removal rates (grams per square meter per day), system B emerges as the most efficient wetland considered.
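The loading calculations described in this section can be sketched in a few short functions. The example below is a minimal illustration, not the authors' software: the function names are invented here, and the two example systems use hypothetical flows, concentrations, and areas rather than the values in table 9.

```python
UNIT_FACTOR = 1.44  # (1,440 min per day) / (1,000 mg per g)


def load_g_per_day(flow_l_min: float, conc_mg_l: float) -> float:
    """Daily contaminant load (g/d) from flow (L/min) and concentration (mg/L)."""
    return UNIT_FACTOR * flow_l_min * conc_mg_l


def removed_g_per_day(flow_l_min: float, conc_in: float, conc_eff: float) -> float:
    """Daily mass removed (g/d) between influent and effluent stations."""
    return load_g_per_day(flow_l_min, conc_in) - load_g_per_day(flow_l_min, conc_eff)


def area_adjusted_removal(flow_l_min: float, conc_in: float,
                          conc_eff: float, area_m2: float) -> float:
    """Area-adjusted removal rate (g per m^2 per day)."""
    if area_m2 <= 0:
        raise ValueError("surface area must be positive")
    return removed_g_per_day(flow_l_min, conc_in, conc_eff) / area_m2


# Hypothetical systems (NOT table 9 data): same concentrations and area,
# but the second receives four times the flow.
low_flow = dict(flow_l_min=10.0, conc_in=100.0, conc_eff=40.0, area_m2=500.0)
high_flow = dict(flow_l_min=40.0, conc_in=100.0, conc_eff=40.0, area_m2=500.0)

for name, s in (("low flow", low_flow), ("high flow", high_flow)):
    removed = removed_g_per_day(s["flow_l_min"], s["conc_in"], s["conc_eff"])
    rate = area_adjusted_removal(**s)
    print(f"{name}: {removed:.0f} g/d removed, {rate:.2f} g/m2/d")
```

With identical concentration changes, the higher-flow system removes four times the mass of Fe per day, which is the distinction that concentration efficiency alone cannot show.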

Dilution Adjustments

Contaminant concentrations decrease as water flows through treatment systems because chemical and biological processes remove contaminants from solution and because the concentrations are diluted by inputs of freshwater. To recognize and quantify the removal of contaminants by biological and chemical processes in passive treatment systems, it is necessary to remove the effects of dilution. Ideally, studies of treatment systems include the development of detailed hydrologic and chemical budgets so that dilution effects are readily apparent. In practice, the hydrologic information needed to develop these budgets is rarely available, except when systems are built for research purposes. Treatment systems constructed by mining companies and reclamation groups are rarely designed to facilitate flow measurements at all water sampling locations, so estimating dilution from hydrologic information is highly inaccurate or impossible.

An alternative method for distinguishing the effects of dilution from those of chemical and biological processes is through the use of a conservative ion. By definition, the concentration of a conservative ion changes between two sampling points only because of dilution or evaporation. Changes in concentrations of contaminant ions that proportionately exceed those of conservative ions can then be attributed to biological and chemical wetland processes.

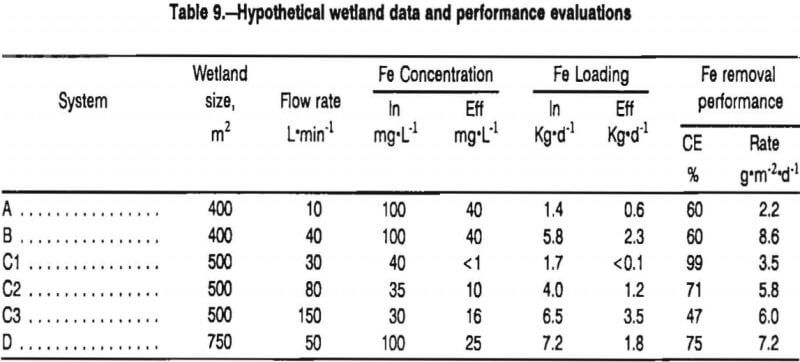

In this study, Mg was used as a conservative ion. Magnesium was considered a good indicator of dilution in these systems for both theoretical and empirical reasons.

In northern Appalachia, concentrations of Mg in coal mine drainage are often >50 mg·L-¹, while concentrations in rainfall are <1 mg·L-¹ and in surface runoff are usually <5 mg·L-¹. Magnesium is unlikely to precipitate in passive treatment systems because the potential solid precipitates, MgSO4, MgCO3, and CaMg(CO3)2, do not form at the concentrations and pH conditions found in the systems. While biological and soil processes exist that may remove Mg in wetlands, their significance is negligible relative to the high Mg loadings that most mine water treatment systems in northern Appalachia receive. The average Mg loading for wetland systems included in this study was approximately 7,000 g Mg·m-²·yr-¹. The uptake of dissolved Mg by plants in constructed wetlands can only account for 5 to 10 g Mg·m-²·yr-¹. This estimate assumes that the net primary productivity of the constructed wetlands is 2,000 g·m-²·yr-¹ dry weight and that the Mg content of this biomass is 0.25% to 0.50%. The estimate ignores mineralization processes that would decrease the net retention of Mg to lower values. Most constructed wetlands have a clay base that can adsorb Mg by cation exchange processes, but the total removal of Mg by this process is limited to about 100 g·m-². This estimate assumes that the mine water is in contact with a 5-cm-deep clay substrate that has a density of 1.5 g·cm-³, a cation exchange capacity of 25 meq per 100 g, and 50% of the available sites are occupied by Mg. These conservative calculations indicate that less than 2% of the annual Mg loading at the study sites is likely affected by biological and soil processes within the systems.
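The Mg budget in the preceding paragraph can be reproduced from the stated assumptions. The sketch below uses only the figures given in the text; the Mg equivalent weight (atomic weight 24.305 divided by a charge of 2) and the function names are supplied here for illustration. The one-time cation exchange capacity is counted against a single year of loading, which overstates its importance.

```python
MG_EQUIV_WEIGHT_MG_PER_MEQ = 24.305 / 2  # Mg2+: atomic weight / charge
CM2_PER_M2 = 10_000


def plant_uptake_g_m2_yr(npp_g_m2_yr: float, mg_fraction: float) -> float:
    """Mg taken up by plants, from net primary productivity and Mg content."""
    return npp_g_m2_yr * mg_fraction


def cation_exchange_mg_g_m2(depth_cm: float, density_g_cm3: float,
                            cec_meq_per_100g: float, mg_site_fraction: float) -> float:
    """One-time Mg sorption capacity of a clay layer, in g Mg per m^2."""
    clay_mass_g_m2 = depth_cm * density_g_cm3 * CM2_PER_M2
    mg_meq_m2 = clay_mass_g_m2 * cec_meq_per_100g / 100.0 * mg_site_fraction
    return mg_meq_m2 * MG_EQUIV_WEIGHT_MG_PER_MEQ / 1000.0  # mg -> g


annual_loading = 7000.0  # g Mg per m^2 per year (average reported in the text)
uptake_low = plant_uptake_g_m2_yr(2000.0, 0.0025)
uptake_high = plant_uptake_g_m2_yr(2000.0, 0.0050)
exchange = cation_exchange_mg_g_m2(5.0, 1.5, 25.0, 0.50)
affected_fraction = (uptake_high + exchange) / annual_loading

print(f"plant uptake: {uptake_low:.0f} to {uptake_high:.0f} g/m2/yr")
print(f"cation exchange: about {exchange:.0f} g/m2")
print(f"share of annual loading: {affected_fraction:.1%}")
```

Even with the upper plant-uptake value and the full exchange capacity, the combined sink stays below 2% of the annual loading, in line with the statement above.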

Empirical data also indicate that Mg is conservative in the wetlands monitored in this study. Table 10 shows influent and effluent concentrations of major noncontaminant ions at eight constructed wetlands. No precipitation had occurred in the study area for 2 weeks previous to collection of the samples, so dilution from rainfall, surface water, or shallow ground water seeps was minimal. Magnesium was the most conservative ion measured. Concentrations of Mg changed by <5% with flow through every wetland, while concentrations of all other ions monitored changed by at least 15% at at least one site.

Changes in concentrations of Mg were used to adjust for dilution effects by the following method. For each set of water samples from a constructed wetland, a dilution factor (DF) was calculated from changes in concentrations of Mg between the influent and effluent station:

$$\mathrm{DF} = \frac{\mathrm{Mg_{eff}}}{\mathrm{Mg_{in}}} \tag{7}$$

Contaminant concentrations were adjusted to account for dilution using the DF. When only an influent flow rate was available, the chemical composition of the effluent water sample was adjusted. For Fe, the adjustment calculation was

$$\Delta\mathrm{Fe_{DA}} = \mathrm{Fe_{in}} - \frac{\mathrm{Fe_{eff}}}{\mathrm{DF}} \tag{8}$$

where ΔFeDA is expressed in milligrams per liter. When only an effluent flow rate was available, the chemical composition of the influent water sample was adjusted,

$$\Delta\mathrm{Fe_{DA}} = (\mathrm{Fe_{in}} \times \mathrm{DF}) - \mathrm{Fe_{eff}} \tag{9}$$

Because most of the DF values were < 1.00, the adjustment procedures generally resulted in smaller estimates of changes in contaminant concentrations than would have been calculated without the dilution adjustment.

Rates of contaminant removal, expressed as grams per square meter per day, were then calculated from the dilution-adjusted change in concentrations, the flow rate measurement (liters per minute), and the SA of the system (square meters):

$$\mathrm{Fe}(\mathrm{g \cdot m^{-2} \cdot d^{-1}})_{\mathrm{rem}} = \frac{\Delta\mathrm{Fe_{DA}} \times \mathrm{Flow} \times 1.44}{\mathrm{SA}} \tag{10}$$
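Equations 7 through 10 translate directly into code. The following is a minimal sketch under stated assumptions: the function names are invented for illustration, and the sample concentrations, flow, and area are hypothetical values chosen to make the arithmetic easy to follow, not data from the study sites.

```python
UNIT_FACTOR = 1.44  # converts (mg/L x L/min) to g/d


def dilution_factor(mg_in: float, mg_eff: float) -> float:
    """Equation 7: ratio of effluent to influent Mg concentration."""
    if mg_in <= 0:
        raise ValueError("influent Mg concentration must be positive")
    return mg_eff / mg_in


def delta_adjust_effluent(c_in: float, c_eff: float, df: float) -> float:
    """Equation 8: use when only an influent flow rate is available."""
    return c_in - c_eff / df


def delta_adjust_influent(c_in: float, c_eff: float, df: float) -> float:
    """Equation 9: use when only an effluent flow rate is available."""
    return c_in * df - c_eff


def removal_rate(delta_da: float, flow_l_min: float, area_m2: float) -> float:
    """Equation 10: area-adjusted removal rate in g per m^2 per day."""
    return delta_da * flow_l_min * UNIT_FACTOR / area_m2


# Hypothetical sample pair: Mg falls from 100 to 80 mg/L, Fe from 50 to 10 mg/L.
df = dilution_factor(100.0, 80.0)
unadjusted = 50.0 - 10.0
d8 = delta_adjust_effluent(50.0, 10.0, df)
d9 = delta_adjust_influent(50.0, 10.0, df)
rate = removal_rate(d8, flow_l_min=100.0, area_m2=1000.0)

print(f"DF = {df:.2f}")
print(f"unadjusted change = {unadjusted:.1f} mg/L")
print(f"eq. 8 change = {d8:.1f} mg/L, eq. 9 change = {d9:.1f} mg/L")
print(f"removal rate (eq. 8 basis) = {rate:.2f} g/m2/d")
```

With a dilution factor below 1.00, both adjusted changes are smaller than the unadjusted change, which is the behavior described for most sample pairs in the study.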

Loading Limitations

A primary purpose of this chapter is to define the contaminant removal capabilities of passive treatment systems. Accurate assessments of these capabilities require that the treatment systems studied contain excessive concentrations of the contaminants. A system that is completely effective (lowers a contaminant to <2 mg·L-¹) may provide an indication that contaminant removal occurs (if dilution is not the cause of concentration changes), but cannot provide an estimate of the capabilities of the removal processes, as the rate of contaminant removal may be limited simply by the contaminant loading rate. For example, in table 9, the removal rate of Fe for wetland C1 is 3.5 g·m-²·d-¹. This rate is not an accurate estimate of the capability of the wetland to remove Fe because the loading rate on this day was also only 3.5 g·m-²·d-¹. The data from C1 are not sufficient to estimate whether the wetland could have removed 10 or 100 g·m-²·d-¹ of Fe. Only when the wetland is overloaded with Fe (days C2 and C3), can the Fe removal capabilities of the wetland be assessed.
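The screening logic in this paragraph can be expressed as a simple comparison of removal rate against loading rate. The sketch below is an illustration only: the function name and the 5% tolerance are assumptions introduced here, not criteria stated in the study. The first call uses the C1 figures quoted above; the second uses hypothetical values for an overloaded system.

```python
def is_loading_limited(loading_rate: float, removal_rate: float,
                       tolerance: float = 0.05) -> bool:
    """Return True when removal is within `tolerance` (a fraction) of loading.

    In that case the removal rate only reflects how much contaminant arrived,
    not how much the system could have removed. The default tolerance is an
    assumed value for illustration.
    """
    if loading_rate <= 0:
        raise ValueError("loading rate must be positive")
    return removal_rate >= (1.0 - tolerance) * loading_rate


# Wetland C1 from the text: loading and removal are both 3.5 g/m2/d.
c1_limited = is_loading_limited(3.5, 3.5)

# Hypothetical overloaded system: receives 10, removes 6 g/m2/d.
overloaded_limited = is_loading_limited(10.0, 6.0)

print("C1 loading-limited:", c1_limited)
print("Overloaded system loading-limited:", overloaded_limited)
```

Only rates from sample pairs where the check returns False would be treated as estimates of removal capability.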

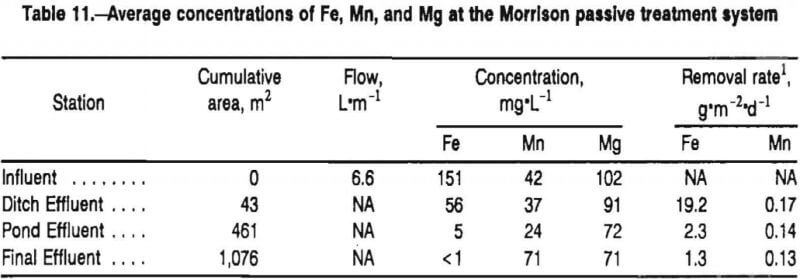

The Morrison passive treatment system demonstrates the necessity of recognizing both dilution and loading-limiting situations in the evaluation of the kinetics of metal removal processes. The Morrison system consists of an anoxic limestone drain followed by a ditch, a settling pond, and two wetland cells. Figure 5, previously presented in chapter 2, shows average concentrations of Fe, Mn, and Mg at the sampling stations. Iron loading and removal rates for the sampling stations are shown in table 11. The treatment system decreased concentrations of Fe from 151 mg·L-¹ at the system influent station (the ALD discharge) to <1 mg·L-¹ at the final wetland effluent station. Most of the change in Fe chemistry occurred in the ditch, a portion of the system that only accounted for 4% of the total treatment system SA. Calculations of the rate of Fe removal based on the entire treatment system resulted in a value of 1.3 g·m-²·d-¹. Because this removal rate is equivalent to the load, it does not represent a reliable approximation of the system’s Fe-removal capability. Only when an Fe removal rate is calculated for the ditch, an area where Fe loading exceeded Fe removal, does an accurate assessment of the Fe removal capabilities result.

Concentrations of Mn at the Morrison effluent station were generally above discharge limits. Manganese was detectable in every effluent water sample (>0.4 mg·L-¹) and >2 mg·L-¹ in 75% of the samples. Thus, it was reasonable to evaluate the kinetics of Mn removal based on the SA of the entire treatment system. Concentrations of Mg, however, decreased with flow through the treatment system, suggesting an important dilution component. Effluent water samples contained, on average, 31% lower concentrations of Mg than did the influent samples. On several occasions when the site was sampled in conjunction with a rainstorm, differences between effluent and influent concentrations of Mg were larger than 50%. Measurements of metal removal by the Morrison treatment system that did not attempt to account for dilution would significantly overestimate the actual kinetics of metal removal processes.

Dilution adjustments were possible for every set of water samples collected from a treatment system because concentrations of Mg were determined for every water sample. Problems with loading limitations, however, could not be corrected at every site. At two sites where complete removal of Fe occurred, the Blair and Donegal wetlands, the designs of the systems were not conducive to the establishment of intermediate sampling stations. For these two systems, no Fe removal rates were calculated because complete removal of Fe occurred over an undetermined area of treatment system.

Study Sites

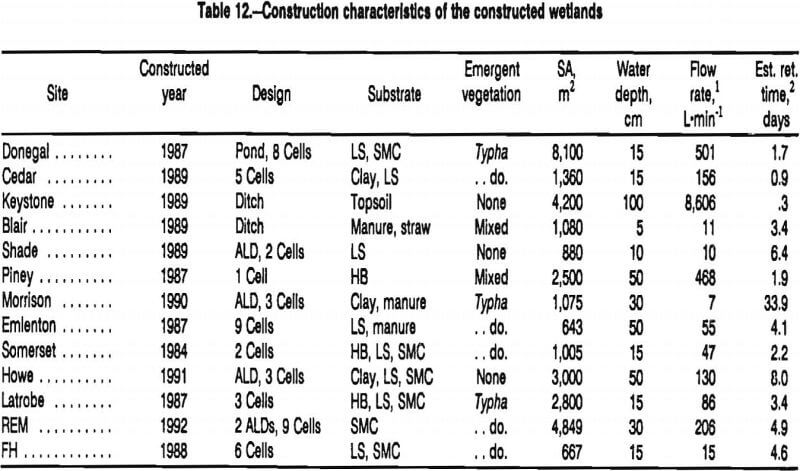

The design characteristics of the 13 passive treatment systems monitored during this study are shown in table 12.

At four of the sites, acidic mine water was pretreated with anoxic limestone drains (ALD’s) before it flowed into constructed wetlands. The construction materials for the wetlands ranged from mineral substances, such as clay and limestone rocks, to organic substances such as spent mushroom compost, manure, and hay bales. Cattails (Typha latifolia and, less commonly, T. angustifolia) were the most common emergent plants growing in the systems. Three sites contained few emergent plants. Most of the wetland systems consisted of several cells or ponds connected serially. Two systems, however, each consisted of a single long ditch.

The mean influent flow rates of mine drainage at the study sites ranged from 7 to 8,600 L·min-¹ (table 12). The highest flow rates occurred where drainage discharged from abandoned and flooded underground mines. The lowest flow rates occurred at surface mining sites. Estimated average retention times ranged from 8 h to more than 30 days.

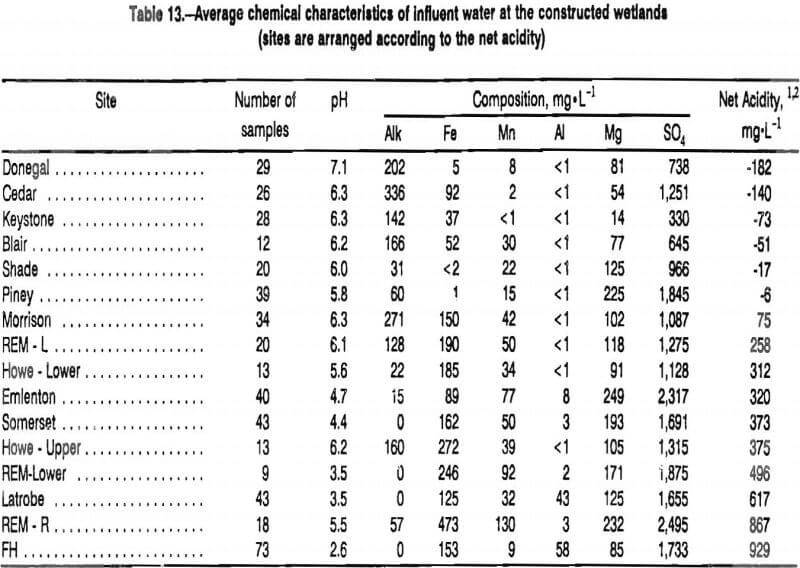

The average chemistry of the influents to the 16 constructed wetlands is shown in table 13. Data from 15 sampling points are shown. At the REM site, two discharges are treated by distinct ALD-wetland systems that eventually merge into a single flow. The combined flows are referred to as REM-Lower. Mine water at the Howe Bridge system is characterized at two locations. The “upper” analysis describes mine water discharging from an ALD that flows into aerobic settling ponds. The “lower” analysis describes the chemistry of water flowing out of the last settling pond and into a large compost-limestone wetland that is constructed so that mine water flows in a subsurface manner.

Ten of the influents to the constructed wetlands had pH >5 and concentrations of alkalinity >25 mg·L-¹. The alkaline character of five of these discharges resulted from pretreatment of the mine water with ALD’s. The high concentrations of alkalinity contained by five discharges not pretreated with ALD’s arose from natural geochemical reactions within the mine spoil (Donegal and Blair) or the flooded deep mine (Cedar, Keystone, and Piney). For mine waters that contained appreciable alkalinity, the principal contaminants were Fe and Mn.

Concentrations of alkalinity for six of the influents were high enough to result in net alkaline conditions (negative net acidity in table 13). A seventh alkaline influent, Morrison, was only slightly net acidic. For these seven influents, enough alkalinity existed in the mine waters to offset the mineral acidity associated with Fe oxidation and hydrolysis.

Nine of the influents were highly acidic. Five of the acidic influents contained alkalinity, but mineral acidity associated with dissolved Fe and Mn caused the solutions to be highly net acidic. These inadequately buffered waters were contaminated with Fe and Mn. Four of the waters contained no appreciable alkalinity (pH <4.5) and high concentrations of acidity. Mine waters with low pH were contaminated with Fe, Mn, and Al.

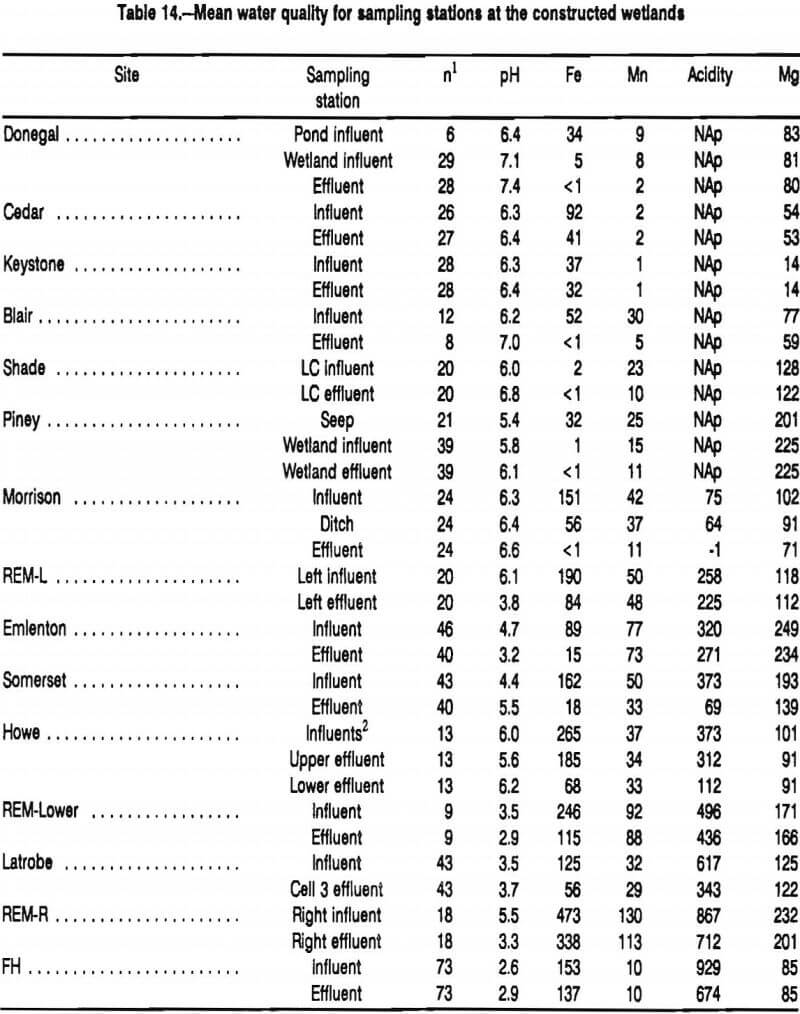

Effects of Treatment Systems on Contaminant Concentrations

The effects of the treatment systems on contaminant concentrations are shown in table 14. Every system decreased concentrations of Fe. At four sites where the original mine discharge contained elevated concentrations of Fe, the final discharges contained <1 mg·L-¹. Nine of the systems decreased Fe concentrations by more than 50 mg·L-¹. The largest change in Fe occurred at the Howe Bridge system where concentrations decreased by 197 mg·L-¹. From a compliance perspective, the most impressive decrease in Fe occurred at the Morrison system where 151 mg·L-¹ decreased to <1 mg·L-¹.

Fourteen of the passive systems received mine water contaminated with Mn. Eleven of these systems decreased concentrations of Mn. Changes in Mn were smaller than changes in Fe. The largest change in Mn concentration, 31 mg·L-¹, occurred at the Morrison site. Only the Donegal treatment system discharged water that consistently met effluent criteria for Mn (<2 mg·L-¹). Both the Shade and Blair wetland effluents flowed into settling ponds, which discharged water in compliance with regulatory criteria. On occasion, the discharges of the Morrison and Piney treatment systems met compliance criteria.

Every wetland system decreased concentrations of acidity. The Morrison system, which received mine water that contained 75 mg·L-¹ acidity, always discharged net alkaline water. None of the constructed wetlands that received highly acidic water (net acidity >100 mg·L-¹) regularly discharged water with a net alkalinity. During low-flow periods, the Somerset, Latrobe, and FH systems discharged net alkaline water. The largest change in acidity occurred at the Somerset wetland where concentrations decreased by an average 304 mg·L-¹.

Dilution Factors

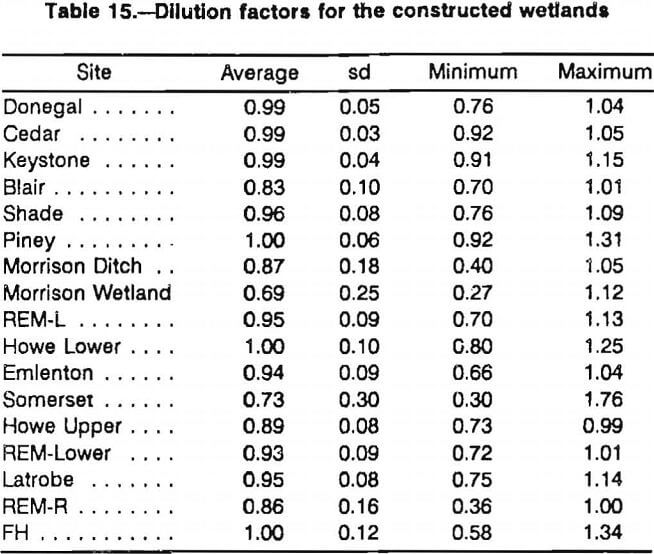

While contaminant concentrations decreased with flow through every constructed wetland, concentrations of Mg also decreased at many of the sites. Decreases in Mg indicated that part of the improvement in water quality was because of dilution. Average dilution factors for the treatment systems are shown in table 15. For 9 of the 17 systems, average dilution factors were 0.95 to 1.00 and dilution adjustments were minor. At the remaining eight systems, mean DF values were less than 0.95 and dilution adjustments averaged more than 5%. Water quality data from the Morrison and Somerset constructed wetlands were adjusted, on average, by more than 25%.

Dilution factors varied widely between sampling days. Dilution adjustments were higher for pairs of samples collected in conjunction with precipitation events or thaws. Every system was adjusted by more than 5% on at least one occasion (see minimum dilution factors in table 15). Adjustments of more than 20% occurred on at least one occasion at 13 of the 17 study sites.

Few dilution factors were >1.00 (see maximum dilution factors in table 15). Of the 390 dilution factors that were calculated for the entire data set, 13 exceeded 1.05. These high dilution factors could have resulted from evaporation or freezing out of uncontaminated water within the treatment system, from temporal changes in water chemistry, or from sampling errors. Most of the high dilution factors were associated with rainstorm events, suggesting temporal changes in water quality. When dilution factors were >1.00, the calculated rates of contaminant removal were greater than would have been estimated without any dilution adjustment. Because of the limited number of sample pairs with high dilution factors, their presence did not markedly affect the average contaminant removal rates for the constructed wetland study areas.

Removal of Metals from Alkaline Mine Water

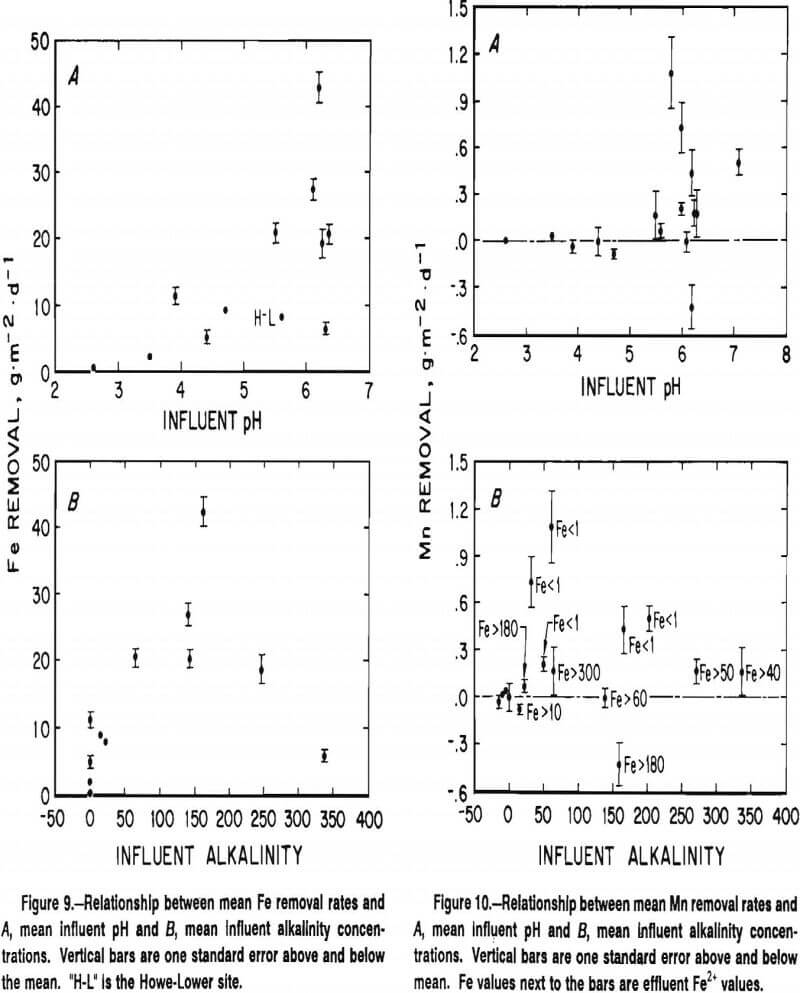

Rates of Fe and Mn removal for the study systems are shown in table 16. Significant removal of Fe occurred at every study site. Fe removal rates were directly correlated with pH and the presence of bicarbonate alkalinity (figure 9). These two water quality parameters are closely related because the buffering effect of bicarbonate alkalinity causes mine waters with >50 mg·L-¹ alkalinity to typically have a pH between 6.0 and 6.5. Within the group of sites that received alkaline mine water, there was not a significant relationship between the Fe removal rate and the concentration of alkalinity.

Removal of Fe at the alkaline mine water sites appeared to occur principally through the oxidation of ferrous iron and the precipitation of ferric hydroxide (reaction A, chapter 2). Mine water within the systems was turbid with suspended ferric hydroxides. By the cessation of the studies, each of the alkaline water sites had developed thick accumulations of iron oxyhydroxides. Laboratory experiments, discussed in chapter 2, demonstrated that abiotic ferrous iron oxidation processes are rapid in aerated alkaline mine waters. No evidence was found that microbially-mediated anaerobic Fe removal processes, which require the presence of an organic substrate, contributed significantly to Fe removal at the alkaline sites. Fe removal rates at the REM wetlands, which were constructed with fertile compost substrates, did not differ from rates at sites constructed with mineral substrates (Morrison, Howe-Upper, Keystone).

Rates of Fe removal averaged 23 g·m-²·d-¹ at the six sites that contained alkaline, Fe-contaminated water. Four of the alkaline systems displayed similar rates despite widely varying flow conditions, water chemistry, and system designs. The Keystone system, a deep, plantless ditch that lowered Fe concentrations in a very large deep mine discharge by 5 mg·L-¹, removed Fe at a rate of 21 g·m-²·d-¹. The shallow-water Morrison ditch, which decreased concentrations of Fe in a low-flow seep by almost 100 mg·L-¹, had an average Fe removal rate of 19 g·m-²·d-¹. The REM-L and REM-R wetlands, which were constructed almost identically, but received water with contaminant concentrations and flow rates that varied by 200%, displayed Fe removal rates of 20 and 28 g·m-²·d-¹.

Two alkaline mine water sites varied considerably from the other sites in their Fe removal capabilities. The Cedar Grove wetland removed Fe at a rate of 6 g·m-²·d-¹, while the Howe Bridge Upper site removed Fe at a rate of 43 g·m-²·d-¹. The Cedar Grove system consists of a series of square cells that may have more short-circuiting flow paths than the rectangular-shaped cells of the other systems. The Cedar Grove system also contains fewer aeration structures than the other systems. Mine water at the site upwells from a flooded underground mine into a pond that discharges into a three-cell wetland. Limited topographic relief prevented the inclusion of structures that efficiently aerate the water (e.g., waterfalls, steps). The Howe Bridge Upper system, in contrast, very effectively aerates water. Drainage drops out of a 0.3-m-high pipe, flows down a cascading ditch and through a V-notch weir before it enters a large settling pond. Because the rate of abiotic ferrous iron oxidation is directly proportional to the concentration of dissolved oxygen, insufficient oxygen transfer may explain the low rate of Fe removal at the Cedar Grove site, while exceptionally good oxygen transfer at the Howe Bridge Upper site may explain its high rate of Fe removal.
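As an arithmetic check, the site rates quoted in the two preceding paragraphs can be averaged directly. The sketch below uses only those six values; the dictionary keys are shorthand labels chosen here.

```python
from statistics import mean

# Fe removal rates (g per m^2 per day) as quoted in the text.
fe_removal = {
    "Keystone": 21,
    "Morrison ditch": 19,
    "REM-L": 20,
    "REM-R": 28,
    "Cedar Grove": 6,
    "Howe Bridge Upper": 43,
}

all_sites_mean = mean(fe_removal.values())

# The four systems described as displaying similar rates.
similar = ["Keystone", "Morrison ditch", "REM-L", "REM-R"]
similar_mean = mean(fe_removal[name] for name in similar)

print(f"mean of all six sites: {all_sites_mean:.1f} g/m2/d")
print(f"mean of the four similar sites: {similar_mean:.1f} g/m2/d")
```

The six-site mean rounds to the 23 g·m-²·d-¹ reported above, and the two outlying sites largely offset each other, so the four similar systems alone give nearly the same average.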

At sites where the buffering capacity of bicarbonate alkalinity exceeded the mineral acidity associated with iron hydrolysis, precipitation of Fe did not result in decreased pH. This neutralization was evident at the Morrison, Cedar, Keystone, Blair, Piney, and Donegal sites (table 14). At the Howe Bridge and REM wetlands, the mine water was insufficiently buffered and iron hydrolysis eventually exhausted the alkalinity and pH fell to low levels. The effluents of both REM systems had pH <3.5. The Howe Bridge Upper system discharged marginally alkaline water (<25 mg·L-¹ alkalinity; pH 5.6). Spot checks of the pH of surface water 20 m into the Howe Bridge Lower wetland (which receives the Upper system effluent) always indicated pH values <3.5.

Significant removal of Mn only occurred at five of the constructed wetlands (table 13). Each of these sites received alkaline mine water (figure 10). Each site also either received water with low concentrations of Fe (Piney and Shade) or developed low concentrations of Fe within the treatment system (Blair, Donegal, and Morrison).

Alkaline sites that contained high concentrations of Fe throughout the treatment system (Howe-Upper, REM-L, REM-R, and Cedar) did not remove significant amounts of Mn. The Morrison ditch, which contained water with an average 56 mg·L-¹ Fe, had a significant Mn removal rate. This rate, however, was derived from an average dilution-adjusted decrease in Mn concentrations of only 1.2 mg·L-¹ or 3% of the influent concentrations. Because of uncertainties with sampling, analysis, and dilution-adjustment procedures that could reasonably bias Mn data by 2-3%, the authors do not currently place much practical confidence in this value.

The five sites that markedly decreased concentrations of Mn had variable designs. The Donegal wetland has a thick organic and limestone substrate and is densely vegetated with cattails. The Blair and Morrison wetlands contain manure substrates and are densely vegetated with emergent vegetation. The Piney wetland was not constructed with an organic substrate and includes deep open water areas and shallow vegetated areas. The Shade treatment system contains limestone rocks, no organic substrate, and few emergent plants. Thus, chemical aspects of the water, not particular design parameters, appear to principally control Mn removal in constructed wetlands.

The removal of Mn from aerobic mine waters appeared to result from oxidation and hydrolysis processes. Black Mn-rich sediments were visually abundant in the Shade, Donegal, and Blair wetlands. As discussed in chapter 2, the specific mechanism by which these oxidized Mn solids form is unclear. The amorphous nature of the solids prevented identification by standard X-ray diffraction methods. However, samples of Mn-rich solids collected from the Shade and Blair wetlands were readily dissolved by alkaline ferrous iron solutions, indicating the presence of oxidized Mn compounds.

Mn²+ can reportedly be removed from water by its sorption to charged FeOOH (ferric oxyhydroxide) particles. If this process is occurring at the study wetlands, it is not a significant sink for Mn removal. The bottoms of the Morrison ditch, Howe-Upper, Cedar, REM-L, and REM-R wetlands were covered with precipitated FeOOH and the mine water within these wetlands commonly contained 5 to 10 mg·L-¹ of suspended FeOOH (difference of the Fe content of unfiltered and filtered water samples). After mine water concentrations were adjusted to reflect dilution, no removal of Mn was indicated at four of the sites and very minor removal of Mn occurred at the fifth site (Morrison ditch).

Although the processes that remove Mn and Fe from alkaline mine water appear to be mechanistically similar (both involve oxidation and hydrolysis reactions), the observed kinetics of the metal removal processes are quite different. In the alkaline mine waters studied, Mn removal rates were 20 to 40 times slower than Fe removal rates.

The presence or absence of emergent plants in the wetlands did not have a significant effect on rates of either Fe or Mn removal at the alkaline mine water sites. In general, bioaccumulation of metals in plant biomass is an insignificant component of Fe and Mn removal in constructed wetlands. The ability of emergent plants to oxygenate sediments and the water column has been proposed as an important indirect plant function in wetlands constructed to treat polluted water. Either oxygenation of the water column is not a rate limiting aspect of metal oxidation at the constructed wetlands that received alkaline mine water, or physical oxygen transfer processes are more rapid than plant-induced processes.

Removal of Metals and Acidity from Acid Mine Drainage

Metal removal was slower at constructed wetlands that received acidic mine water than at those that received alkaline mine water. Removal of Mn did not occur at any site that received highly acidic water (figure 10). Removal of Fe occurred at every wetland that received acidic mine water, but the Fe removal rates were less than one-half those determined at alkaline wetlands (figure 9). Because abiotic ferrous iron oxidation processes are extremely slow at pH values <5, virtually all the Fe removal observed at the acidic sites must arise from direct or indirect microbial activity. Microbially-mediated Fe removal under acidic conditions is, however, slower than abiotic Fe-removal processes under alkaline conditions.

Wetlands that treat acidic mine water must both precipitate metal contaminants and neutralize acidity. At most wetland sites, acidity neutralization was the slower process. At the Emlenton and REM wetlands, Fe removal processes were accompanied on every sampling occasion by an increase in proton acidity which markedly decreased pH (see figure 4A, chapter 2). Mine water pH occasionally decreased with flow through the Latrobe and Somerset wetlands. Thus, for the wetlands included in this study, the limiting aspect of acid mine water treatment was the generation of alkalinity or the removal of acidity (which were considered in this report to be equivalent, see chapter 2). The best measure of the effectiveness of the acid water treatment systems was through the calculation of acidity removal rates.

Acidity can be neutralized in wetlands through the alkalinity-producing processes of carbonate dissolution and bacterial sulfate reduction. As was discussed in chapter 2, the presence of an organic substrate where reduced Eh conditions develop promotes both alkalinity-generating processes. In highly reduced environments where dissolved oxygen and ferric iron are not present, carbonate surfaces are not passivated by FeOOH armoring. Decomposition of the organic substrate can result in elevated partial pressures of CO2 and promote carbonate dissolution. The presence of organic matter also promotes the activity of sulfate-reducing bacteria.

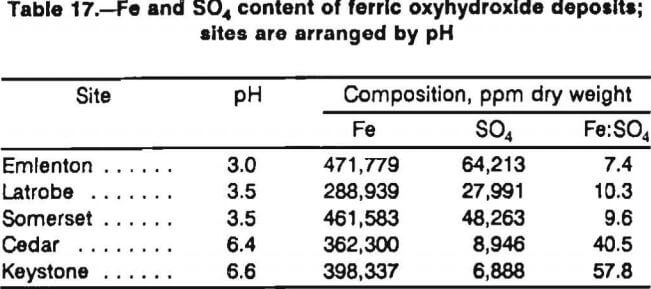

The rates of alkalinity generated from these two processes in the constructed wetlands were determined based on dilution-adjusted changes in the concentrations of dissolved Ca and sulfate, the stoichiometry of the alkalinity-generating reactions, and measured flow rates. The calculations are based on the assumption that Ca concentrations only increase because of carbonate dissolution and that sulfate concentrations only decrease because of bacterial sulfate reduction. One possible error in this approach is that sulfate can co-precipitate with ferric hydroxides in low-pH aerobic environments. The Fe and sulfate content of surface deposits collected from the constructed wetlands indicate that sulfate is incorporated into the precipitates collected from acidic environments at an average Fe:SO4 ratio of 9.7 (table 17). If all of the Fe removed from mine water is assumed to precipitate as ferric hydroxide with a Fe:SO4 ratio of 9.7:1, then changes in sulfate concentrations attributable to the co-precipitation process amount to only 5 to 30 mg·L-¹ at the acid mine water sites. Dilution-adjusted changes in sulfate concentrations at the Somerset, Latrobe, Friendship Hill (FH), and Howe-Lower wetlands were commonly 200 to 500 mg·L-¹.

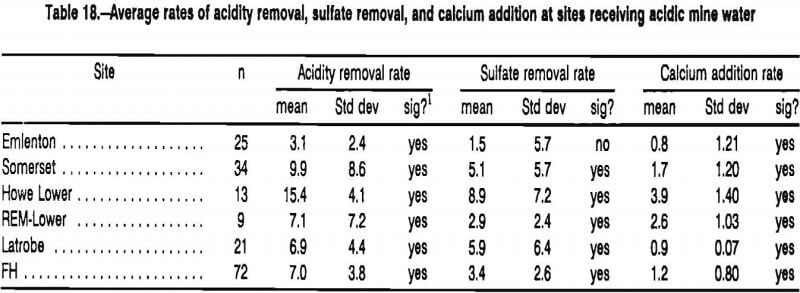

Rates of acidity removal, sulfate removal and calcium addition for six constructed wetlands that received acidic mine water are shown in table 18. Significant removal of acidity occurred at all sites. The lowest rates of acidity removal occurred at the Emlenton wetland. This site consists of cattails growing in a manure and limestone substrate. No sulfate reduction was indicated (the rate was not significantly >0). Dissolution of the limestone was indicated, but the rate was the lowest observed.

The Latrobe, Somerset, FH, Howe-Lower, and REM systems were each constructed with a spent mushroom compost and limestone substrate. Spent mushroom compost is a good substrate for microbial growth and has a high limestone content (10% dry weight). At these five wetlands, sulfate reduction and limestone dissolution both occurred at significant rates (table 18). The summed amount of alkalinity generated by sulfate reduction and limestone dissolution processes (Reactions M and N, chapter 2) correlated strongly with the measured rate of acidity removal at these four sites (r >0.90 at each site). At the FH wetland, 94% of the measured acidity removal could be explained by these two processes (figure 11).

On average, sulfate reduction and limestone dissolution contributed equally to alkalinity generation at these five sites (51% versus 49%, respectively). The average sulfate removal rate calculated for the compost sites, 5.2 g SO4²-·m-²·d-¹, is equivalent to a sulfate reduction rate of approximately 180 nmol·cm-³·d-¹. This value is consistent with measurements of sulfate reduction made at the constructed wetlands using isotope methods as well as measurements of sulfate reduction made for coastal ecosystems.

The highest rates of acidity removal, sulfate reduction, and limestone dissolution all occurred at the Howe-Lower site. This system differs from the others by its subsurface flow system. Drainage pipes, buried in the limestone that underlies the compost, cause the mine water to flow directly through the substrate. At the Somerset, Latrobe, REM, and FH systems, water flows surficially through the wetlands. Mixing of the acidic surface water and alkaline substrate waters presumably occurs by diffusion processes at the surface-flow sites. By bringing contaminated water into direct contact with the alkaline substrate, the Howe-Lower site is extracting alkalinity from the substrate at a significantly higher rate than occurs in surface flow systems. How long the Howe-Lower system can continue to generate alkalinity at the present rates is unknown. Monitoring of the system, currently in its third year of operation, is continuing.

Design and Sizing of Passive Treatment Systems

Three principal types of passive technologies currently exist for the treatment of coal mine drainage: aerobic wetland systems, wetlands that contain an organic substrate, and anoxic limestone drains (ALD’s). In aerobic wetland systems, oxidation reactions occur and metals precipitate primarily as oxides and hydroxides. Most aerobic wetlands contain cattails growing in a clay or spoil substrate. However, plantless systems have also been constructed and, at least in the case of alkaline influent water, function similarly to those containing plants (chapter 3).

Wetlands that contain an organic substrate are similar to aerobic wetlands in form, but also contain a thick layer of organic substrate. This substrate promotes chemical and microbial processes that generate alkalinity and neutralize acidic components of mine drainage. The term “compost wetland” is often used in this report to describe any constructed wetland that contains an organic substrate in which biological alkalinity-generating processes occur. Typical substrates used in these wetlands include spent mushroom compost, Sphagnum peat, haybales, and manure.

The ALD is a buried bed of limestone that is intended to add alkalinity to the mine water. The limestone and mine water are kept anoxic so that dissolution can occur without armoring of limestone by ferric oxyhydroxides. ALD’s are only intended to generate alkalinity, and must be followed by an aerobic system in which metals are removed through oxidation and hydrolysis reactions.

Each of the three passive technologies is most appropriate for a particular type of mine water problem. Often, they are most effectively used in combination with each other. In this chapter, a model is presented that is useful in deciding whether a mine water problem is suited to passive treatment, and also, in designing effective passive treatment systems.

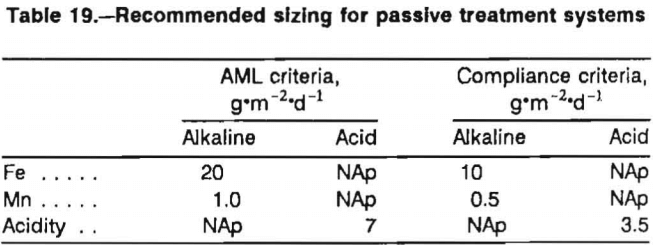

Two sets of sizing criteria are provided (table 19). The “abandoned mined land (AML) criteria” are intended for groups that are attempting to cost-effectively decrease contaminant concentrations. In many AML situations, the goal is to improve water quality, not consistently achieve a specific effluent concentration. The AML sizing criteria are based on measurements of contaminant removal by existing constructed wetlands (chapter 3). Most of the removal rates were measured for treatment systems (or parts of treatment systems) that did not consistently lower concentrations of contaminants to compliance with OSM effluent standards. In particular, the Fe sizing factor for alkaline mine water (20 g·m-²·d-¹) is based on data from six sites, only one of which lowers Fe concentrations to compliance.

It is possible that Fe removal rates are a function of Fe concentration; i.e., as concentrations get lower, the size of system necessary to remove a unit of Fe contamination (e.g., 1 g·d-¹) gets larger. To account for this possibility, a more conservative sizing value is provided for systems where the effluent must meet regulatory guidelines (table 19). These values are referred to as “compliance criteria.” The sizing value for Fe, 10 g·m-²·d-¹, is in agreement with the findings of Stark for a constructed compost wetland in Ohio that receives marginally acidic water. This rate is larger, by a factor of 2, than the Fe removal rate reported by Brodie for aerobic systems in southern Appalachia that are regularly in compliance.

The Mn removal rate used for compliance, 0.5 g·m-²·d-¹, is based on the performance of five treatment systems, three of which consistently lower Mn concentrations to compliance levels. A higher removal value, 1 g·m-²·d-¹, is suggested for AML sites. Because the toxic effects of Mn at moderate concentrations (<50 mg·L-¹) are generally not significant, except in very soft water, and the size of wetland necessary to treat Mn-contaminated water is so large, AML sites with Fe problems should receive a higher priority than those with only Mn problems.

The acidity removal rate presented for compost wetlands is influenced by seasonal variations that cannot currently be corrected with wetland design. This is not a problem for mildly acidic water, where the wetland can be sized in accordance with winter performance, nor should it be a major problem in warmer climates. In northern Appalachia, however, no compost wetland that consistently transforms highly acidic water (>300 mg·L-¹ acidity) into alkaline water is known. One of the study sites, which receives water with an average of 600 mg·L-¹ acidity and does not need to meet a Mn standard, has discharged water that only required chemical treatment during winter months. While considerable cost savings are realized at the site because of the compost wetland, the passive system must be supported by conventional treatment during a portion of the year.

Because long-term metal-removal capabilities of passive treatment systems are currently uncertain, current Federal regulations require that the capability for chemical treatment exist at all bonded sites. This provision is usually met by placing a “polishing pond” after the passive treatment system. The design and sizing model does not currently account for such a polishing pond.

All passive treatment systems constructed at active sites need not be sized according to the compliance criteria provided in table 19. Sizing becomes a question of balancing available space and system construction costs versus influent water quality and chemical treatment costs. Mine water can be treated passively before the water enters a chemical treatment system to reduce water treatment costs or as a potential part-time alternative to full-time chemical treatment. In those cases where both passive and chemical treatment methodologies are utilized, many operators find that they recoup the cost of the passive treatment system in less than a year by using simpler, less expensive chemical treatment systems and/or by decreasing the amount of chemicals used.

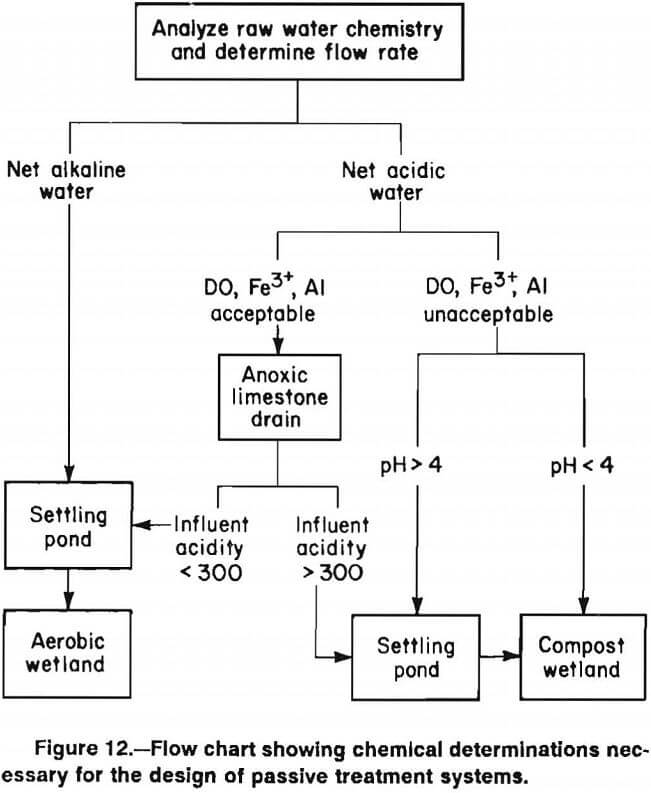

A flow chart that summarizes the design and sizing model is shown in figure 12. The model uses mine drainage chemistry to determine system design, and contaminant loadings combined with the expected removal rates in table 19 to define system size. The following text details the use of this flow chart and also discusses aspects of the model that are currently under investigation.

Characterization of Mine Drainage Discharges

To design and construct an effluent treatment system, the mine water must be characterized. An accurate measurement of the flow rate of the mine discharge or seep is required. Water samples should be collected at the discharge or seepage point for chemical analysis. Initial water analyses should include pH, alkalinity, Fe, Mn, and hot acidity (H2O2 method) measurements. If an anoxic limestone drain is being considered, the acidified sample should be analyzed for Fe³+ and Al, and a field measurement of dissolved oxygen should be made.

Both the flow rate and chemical composition of a discharge can vary seasonally and in response to storm events. If the passive treatment system is expected to be operative during all weather conditions, then the discharge flow rates and water quality should be measured in different seasons and under representative weather conditions.

Calculations of Contaminant Loadings

The size of the passive treatment system depends on the loading rate of contaminants. Calculate contaminant (Fe, Mn, acidity) loads by multiplying contaminant concentrations by the flow rate. If the concentrations are milligrams per liter and flow rates are liters per minute, the calculation is

[Fe, Mn, Acidity] (g·d-¹) = flow (L·min-¹) × [Fe, Mn, Acidity] (mg·L-¹) × 1.44     (11)

If the concentrations are milligrams per liter and flow rates are gallons per minute, the calculation is

[Fe, Mn, Acidity] (g·d-¹) = flow (gal·min-¹) × [Fe, Mn, Acidity] (mg·L-¹) × 5.45     (12)

Calculate loadings for average data and for those days when flows and contaminant concentrations are highest.
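The loading calculation in equations 11 and 12 can be sketched in a few lines of Python. This is only an illustration: the function name and the example discharge (100 L·min-¹ carrying 50 mg·L-¹ Fe) are hypothetical and do not come from the study sites.

```python
# Conversion factors from equations 11 and 12.
# 1.44 = 1,440 min/d divided by 1,000 mg/g; 5.45 also folds in about 3.785 L/gal.
FACTOR_L_PER_MIN = 1.44
FACTOR_GAL_PER_MIN = 5.45


def contaminant_load_g_per_day(concentration_mg_per_l, flow, flow_unit="L/min"):
    """Return the contaminant (Fe, Mn, or acidity) load in grams per day."""
    if flow_unit == "L/min":
        factor = FACTOR_L_PER_MIN
    elif flow_unit == "gal/min":
        factor = FACTOR_GAL_PER_MIN
    else:
        raise ValueError("flow_unit must be 'L/min' or 'gal/min'")
    return flow * concentration_mg_per_l * factor


# Hypothetical discharge: 100 L/min with 50 mg/L Fe (roughly 7,200 g/d).
average_fe_load = contaminant_load_g_per_day(50.0, 100.0)
print(f"Fe load: {average_fe_load:.0f} g/d")
```

Running the same function on both the average and the highest observed flow and concentration data gives the two loadings that the text recommends calculating.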

Classification of Discharges

The design of the passive treatment system depends largely on whether the mine water is acidic or alkaline. One can classify the water by comparing concentrations of acidity and alkalinity.

Net Alkaline Water: alkalinity > acidity

Net Acidic Water: acidity > alkalinity

The successful treatment of mine waters with net acidities of 0 to 100 mg·L-¹ using aerobic wetlands has been documented in this report and elsewhere. In these systems, alkalinity either enters the treatment system with diluting water or alkalinity is generated within the system by undetermined processes. Currently, there is no method to predict which of these marginally acidic waters can be treated successfully with an aerobic system only. For waters with a net acidity >0, the incorporation of alkalinity-generating features (either an ALD or a compost wetland) is appropriate.
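As a minimal sketch, the classification can be written as a comparison of acidity and alkalinity. The function names are hypothetical, and the label used when the two concentrations are exactly equal is an assumption, since the text does not define that case.

```python
def classify_discharge(alkalinity_mg_per_l, acidity_mg_per_l):
    """Classify mine water by comparing acidity with alkalinity.

    Returns (net_acidity, label), where net_acidity = acidity - alkalinity.
    """
    net_acidity = acidity_mg_per_l - alkalinity_mg_per_l
    if net_acidity > 0:
        label = "net acidic"
    elif net_acidity < 0:
        label = "net alkaline"
    else:
        # Assumption: the text does not define the case of equal concentrations.
        label = "balanced"
    return net_acidity, label


def is_marginally_acidic(net_acidity_mg_per_l):
    """True for net acidities of 0 to 100 mg/L, the range in which aerobic
    wetlands alone have sometimes, but not predictably, been successful."""
    return 0 < net_acidity_mg_per_l <= 100


# Hypothetical sample: 20 mg/L alkalinity and 90 mg/L acidity.
net, label = classify_discharge(20.0, 90.0)
print(net, label, is_marginally_acidic(net))
```

A "net acidic" result indicates that an alkalinity-generating feature (an ALD or a compost wetland) is appropriate, even when the water falls in the marginal range.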

Passive Treatment of Net Alkaline Water

Net alkaline water contains enough alkalinity to buffer the acidity produced by metal hydrolysis reactions. The metal contaminants (Fe and Mn) will precipitate given enough time. The generation of additional alkalinity is unnecessary, so incorporation of limestone or an organic substrate into the passive treatment system is also unnecessary. The goal of the treatment system is to aerate the water and promote metal oxidation processes. In many existing treatment systems where the water is net alkaline, the removal of Fe appears to be limited by dissolved O2 concentrations. Standard features that can aerate the drainage, such as waterfalls or steps, should be followed by quiescent areas. Aeration only provides enough dissolved O2 to oxidize about 50 mg·L-¹ Fe²+. Mine drainage with higher concentrations of Fe²+ will require a series of aeration structures and wetland basins. The wetland cells allow time for Fe oxidation and hydrolysis to occur and space in which the Fe floc can settle out of suspension. The entire system can be sized based on the Fe removal rates shown in table 19. For example, a system being designed to improve water quality on an AML site should be sized by the following calculation:

Minimum wetland size (m²) = Fe loading (g·d-¹) / 20 (g·m-²·d-¹)     (13)

If Mn removal is desired, size the system based on the Mn removal rates in table 19. Removal of Fe and Mn occurs sequentially in passive systems. If both Fe and Mn removal are necessary, add the two wetland sizes together.
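The sizing rule can be sketched as follows, using the Fe and Mn removal rates quoted in the text for the AML and compliance criteria (20 and 10 g·m-²·d-¹ for Fe; 1 and 0.5 g·m-²·d-¹ for Mn). The function name and the example loadings are hypothetical.

```python
# Removal rates in g per m2 per day, as quoted in the text for alkaline water.
REMOVAL_RATES = {
    "AML": {"Fe": 20.0, "Mn": 1.0},
    "compliance": {"Fe": 10.0, "Mn": 0.5},
}


def aerobic_wetland_area_m2(fe_load_g_per_day, mn_load_g_per_day=0.0, criteria="AML"):
    """Minimum aerobic wetland area for net alkaline water.

    Fe and Mn are removed sequentially, so the two areas are added together.
    """
    rates = REMOVAL_RATES[criteria]
    fe_area = fe_load_g_per_day / rates["Fe"]
    mn_area = mn_load_g_per_day / rates["Mn"]
    return fe_area + mn_area


# Hypothetical loadings: 7,200 g/d Fe and 720 g/d Mn.
print(aerobic_wetland_area_m2(7200.0))                       # Fe only, AML
print(aerobic_wetland_area_m2(7200.0, 720.0))                # Fe + Mn, AML
print(aerobic_wetland_area_m2(7200.0, 720.0, "compliance"))  # Fe + Mn, compliance
```

In this example the Mn term dominates the total area even though the Mn load is one-tenth of the Fe load, which illustrates why Mn-only problems are costly to treat passively.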

A typical aerobic wetland is constructed by planting cattail rhizomes in soil or alkaline spoil obtained on-site. Some systems have been planted by simply spreading cattail seeds, with good plant growth attained after 2 years. The depth of the water in a typical aerobic system is 10 to 50 cm. Ideally, a cell should not be of uniform depth, but should include shallow and deep marsh areas and a few deep (1 to 2 m) spots. Most readily available aquatic vegetation cannot tolerate water depths greater than 50 cm.

Often, several wetland cells are connected by flow through a V-notch weir, lined railroad tie steps, or down a ditch. Spillways should be designed to pass the maximum probable flow. Spillways should consist of wide cuts in the dike with side slopes no steeper than 2H:1V, lined with nonbiodegradable erosion control fabric, and coarse rip rap if high flows are expected. Proper spillway design can preclude future maintenance costs because of erosion and/or failed dikes. If pipes are used, small diameter (<30 cm) pipes should be avoided because they can plug with litter and FeOOH deposits. Pipes should be made of polyvinyl chloride (PVC). More details on the construction of aerobic wetland systems can be found in a text by Hammer.

The geometry of the wetland site as well as flow control and water treatment considerations may dictate the use of multiple wetland cells. The intercell connections may also serve as aeration devices. If there are elevation differences between the cells, the interconnection should dissipate kinetic energy and be designed to avoid erosion and/or the mobilization of precipitates.

It is recommended that the freeboard of aerobic wetlands constructed for the removal of Fe be at least 1 m. Observations of sludge accumulation in existing wetlands suggest that a 1-m freeboard should be adequate to contain 20 to 25 years of FeOOH accumulation.

The floor of the wetland cell may be sloped up to about 3% grade. If a level cell floor is used, then the water level and flow are controlled by the downstream dam spillway and/or adjustable riser pipes.

As discussed in chapter 3, some of the aerobic systems that have been constructed to treat alkaline mine water have little emergent plant growth. Metal removal rates in these plantless, aerobic systems appear to be similar to those observed in aerobic systems containing plants. However, plants may provide values that are not reflected in measurements of contaminant removal rates. For example, plants can facilitate the filtration of particulates, prevent flow channelization, and provide wildlife benefits that are valued by regulatory and environmental groups.

Passive Treatment of Net Acid Water

Treatment of acidic mine water requires the generation of enough alkalinity to neutralize the excess acidity. Currently, there are two passive methods for generating alkalinity: construction of a compost wetland or pretreatment of acidic drainage by use of an ALD. In some cases, the combination of an ALD and a compost wetland may be necessary to treat the mine water.

ALD’s produce alkalinity at a lower cost than do compost wetlands. However, not all water is suitable for pretreatment with ALD’s. The primary chemical factors believed to limit the utility of ALD’s are the presence of ferric iron (Fe³+), aluminum (Al) and dissolved oxygen (DO). When acidic water containing any Fe³+ or Al contacts limestone, metal hydroxide particulates (FeOOH or Al(OH)3) will form. No oxygen is necessary. Ferric hydroxide can armor the limestone, limiting its further dissolution. Whether aluminum hydroxides armor limestone has not been determined. The buildup of both precipitates within the ALD can eventually decrease the drain permeability and cause plugging. The presence of dissolved oxygen in mine water will promote the oxidation of ferrous iron to ferric iron within the ALD, and thus potentially cause armoring and plugging. While the short-term performance of ALD’s that receive water containing elevated levels of Fe³+, Al, or DO can be spectacular (total removal of the metals within the ALD), the long-term performance of these ALD’s is questionable.

Mine water that contains very low concentrations of DO, Fe³+ and Al (all <1 mg·L-¹) is ideally suited for pretreatment with an ALD. As concentrations of these parameters rise above 1 mg·L-¹, the risk that the ALD will fail prematurely also increases. Recently, two ALD’s constructed to treat mine water that contained 20 mg·L-¹ Al became plugged after 6-8 months of operation.

In some cases, the suitability of mine water for pretreatment with an ALD can be evaluated based on the type of discharge and measurements of field pH. Mine waters that seep from spoils and flooded underground mines and have a field pH >5 characteristically have concentrations of DO, Fe³+, and Al that are all <1 mg·L-¹. Such sites are generally good candidates for pretreatment with an ALD. Mine waters that discharge from open drift mines or have pH <5 must be analyzed for Fe³+ and Al. Mine waters with pH <5 can contain dissolved Al; mine waters with pH <3.5 can contain Fe³+. In northern Appalachia, most mine drainages that have pH <3 contain high concentrations of Fe³+ and Al.
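The screening guidance above can be expressed as two small checks. This is a sketch under stated assumptions: the function names are hypothetical, and treating a value of exactly 1 mg·L-¹ as a risk factor is a choice made for the example, since the text only says that risk increases as concentrations rise above that level.

```python
ALD_THRESHOLD_MG_PER_L = 1.0  # DO, Fe3+ and Al should all be below this value


def ald_risk_factors(do_mg_per_l, fe3_mg_per_l, al_mg_per_l):
    """Return the parameters that are not below the 1 mg/L guideline.

    An empty list means the water is ideally suited to ALD pretreatment.
    """
    measurements = {"DO": do_mg_per_l, "Fe3+": fe3_mg_per_l, "Al": al_mg_per_l}
    return [name for name, value in measurements.items()
            if value >= ALD_THRESHOLD_MG_PER_L]


def needs_fe3_al_analysis(field_ph, open_drift_mine):
    """Water from open drift mines, or with field pH < 5, must be analyzed
    for Fe3+ and Al before an ALD is considered."""
    return open_drift_mine or field_ph < 5


# Hypothetical seep from a flooded underground mine with field pH 5.8.
print(needs_fe3_al_analysis(5.8, open_drift_mine=False))
print(ald_risk_factors(0.3, 0.2, 0.5))
```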

Pretreatment of Acidic Water With ALD

In an ALD, alkalinity is produced when the acidic water contacts the limestone in an anoxic, closed environment. It is important to use limestone with a high CaCO3 content because of its higher reactivity compared with a limestone with a high MgCO3 or CaMg(CO3)2 content. The limestones used in most successful ALD’s have 80% to 95% CaCO3 content. Most effective systems have used number 3 or 4 (baseball-size) limestone. Some systems constructed with limestone fines and small gravel have failed, apparently because of plugging problems. The ALD must be sealed so that inputs of atmospheric oxygen are minimized and the accumulation of CO2 within the ALD is maximized. This is usually accomplished by burying the ALD under several feet of clay. Plastic is commonly placed between the limestone and clay as an additional gas barrier. In some cases, the ALD has been completely wrapped in plastic before burial. The ALD should be designed so that the limestone is inundated with water at all times. Clay dikes within the ALD or riser pipes at the outflow of the ALD will help ensure inundation.

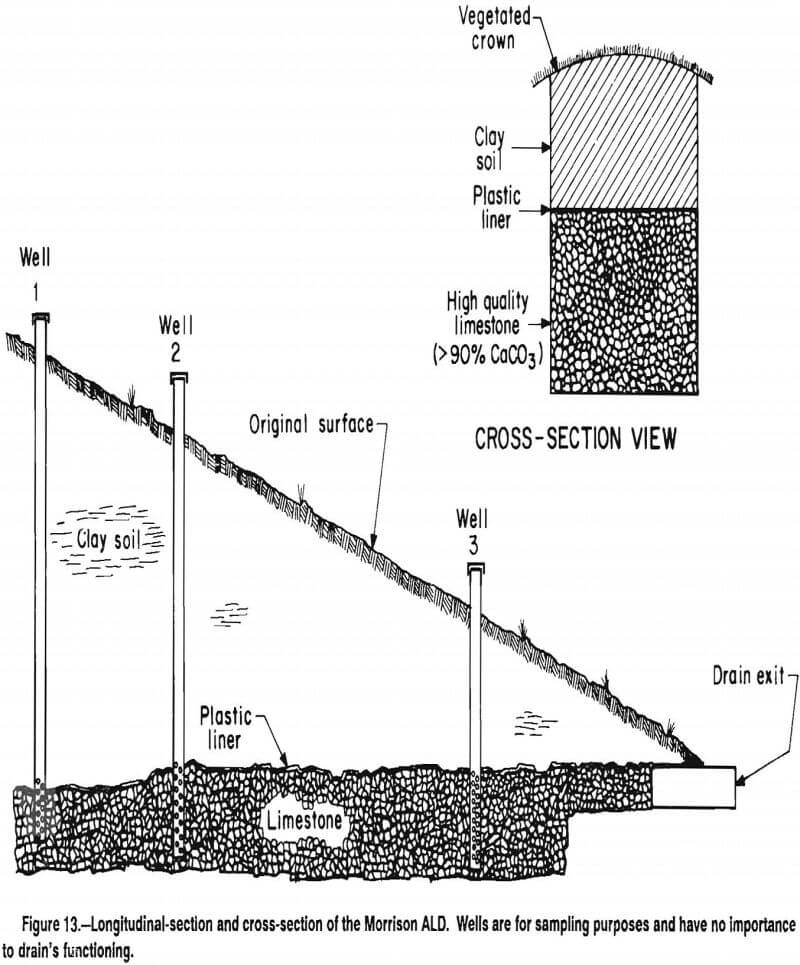

The dimensions of existing ALD’s vary considerably. Most older ALD’s were constructed as long narrow drains, approximately 0.6 to 1.0 m wide. A longitudinal section and cross section of such an ALD are shown in figure 13. The ALD shown was constructed in October 1990, is 1 m wide and 46 m long, and contains about 1 m depth of number 4 limestone. The limestone was covered with two layers of 5 mil plastic, which in turn was covered with 0.3 to 3 m of on-site clay to restore the original surface topography.

At sites where linear ALD’s are not possible, anoxic limestone beds have been constructed that are 10 to 20 m wide. These bed systems have produced alkalinity concentrations similar to those produced by the more conventional drain systems.

The mass of limestone required to neutralize a certain discharge for a specified period can be readily calculated from the mine water flow rate and assumptions about the ALD’s alkalinity-generating performance. Recent USBM research indicates that approximately 14 h of contact time between mine water and limestone in an ALD is necessary to achieve a maximum concentration of alkalinity. To achieve 14 h of contact time within an ALD, approximately 3,000 kg of limestone rock is required for each liter per minute of mine water flow. An ALD that produces 275 mg·L-¹ of alkalinity (the maximum sustained concentration thus far observed for an ALD) dissolves approximately 1,600 kg of limestone per decade for each liter per minute of mine water flow. To construct an ALD that contains sufficient limestone to ensure a 14-h retention time throughout a 30-yr period, the limestone bed should contain approximately 7,800 kg of limestone for each liter per minute of flow. This is equivalent to 30 tons of limestone for each gallon per minute of flow. The calculation assumes that the ALD is constructed with 90% CaCO3 limestone rock that has a porosity of 50%. The calculation also assumes that the original mine water does not contain ferric iron or aluminum. The presence of these ions would result in potential problems with armoring and plugging, as previously discussed.
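The limestone budget described above can be reproduced with a short calculation. This sketch takes the retention requirement (about 3,000 kg per liter per minute for 14 h of contact) directly from the text rather than deriving it from porosity, and it assumes that alkalinity is expressed as mg·L-¹ of CaCO3, which the text does not state explicitly. The function name and default arguments are hypothetical.

```python
MINUTES_PER_YEAR = 1440 * 365


def ald_limestone_mass_kg(flow_l_per_min,
                          design_life_yr=30,
                          alkalinity_mg_per_l=275.0,
                          caco3_fraction=0.90,
                          retention_kg_per_l_per_min=3000.0):
    """Estimate the limestone mass needed for an ALD.

    The total is the mass needed to hold 14 h of contact time at the end of
    the design life plus the mass expected to dissolve over that life.
    Assumes alkalinity is reported as CaCO3 and that the CaCO3 dissolves fully.
    """
    retention_mass = retention_kg_per_l_per_min * flow_l_per_min
    liters_treated = flow_l_per_min * MINUTES_PER_YEAR * design_life_yr
    dissolved_caco3_kg = liters_treated * alkalinity_mg_per_l * 1e-6
    dissolved_limestone_kg = dissolved_caco3_kg / caco3_fraction
    return retention_mass + dissolved_limestone_kg


# Per liter per minute of flow, 30-yr design life (about 7,800 kg).
print(round(ald_limestone_mass_kg(1.0)))
# Dissolution alone over one decade (about 1,600 kg).
print(round(ald_limestone_mass_kg(1.0, design_life_yr=10,
                                  retention_kg_per_l_per_min=0.0)))
```

Because the result scales linearly with flow, the per-unit figures can simply be multiplied by the measured discharge; the open questions about channelization and incomplete dissolution raised in the text are not captured by this arithmetic.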

Because the oldest ALD’s are only 3 to 4 yr old, it is difficult to assess how realistic these theoretical calculations are. Questions about the ability of ALD’s to maintain unchannelized flow for a prolonged period, whether 100% of the CaCO3 content of the limestone can be expected to dissolve, whether the ALD’s will collapse after significant dissolution of the limestone, and whether inputs of DO that are not generally detectable with standard field equipment (0 to 1 mg·L-¹) might eventually result in armoring of the limestone with ferric hydroxides, have not yet been addressed.

The anoxic limestone drain is one component of a passive treatment system. When the ALD operates ideally, its only effect on mine water chemistry is to raise pH to circumneutral levels and increase concentrations of calcium and alkalinity. Dissolved Fe²+ and Mn should be unaffected by flow through the ALD. The ALD must be followed by a settling basin or wetland system in which metal oxidation, hydrolysis and precipitation can occur. The type of post-ALD treatment system depends on the acidity of the mine water and the amount of alkalinity generated by the ALD. If the ALD generates enough alkalinity to transform the acid mine drainage to a net alkaline condition, then the ALD effluent can be treated with a settling basin and an aerobic wetland. If possible, the water should be aerated as soon as it exits the ALD and directed into a settling pond. An aerobic wetland should follow the settling pond. The total post-ALD system should be sized according to the criteria provided earlier for net alkaline mine water. At this time, it appears that mine waters with acidities <150 mg·L-¹ are readily treated with an ALD and aerobic wetland system.

If the mine water is contaminated with only Fe²+ and Mn, and the acidity exceeds 300 mg·L-¹, it is unlikely that an ALD constructed using current practices will discharge net alkaline water. When this partially neutralized water is treated aerobically, the Fe will precipitate rapidly, but the absence of sufficient buffering can result in a discharge with low pH. Building a second ALD, to recharge the mine water with additional alkalinity after it flows out of the aerobic system, is currently not feasible because of the high DO content of water flowing out of aerobic systems. If the treatment goal is to neutralize all of the acidity passively, then a compost wetland should be built so that additional alkalinity can be generated. Such a treatment system thus contains all three passive technologies. The mine water flows through an ALD, into a settling pond and an aerobic system, and then into a compost wetland.

If the mine water is contaminated with ferric iron (Fe³+) or Al, higher concentrations of acidity can be treated with an ALD than when the water is contaminated with only Fe²+ and Mn. This enhanced performance results from a decrease in mineral acidity because of the hydrolysis and precipitation of Fe³+ and Al within the ALD. ALD’s constructed to treat mine water contaminated with Fe³+ and Al and having acidities greater than 1,000 mg·L-¹ have discharged net alkaline water. The long-term prognosis for these metal-retaining systems has been questioned. However, even if calculations of system longevity (as described above) are inaccurate for waters contaminated with Fe³+ and Al, their treatment with an ALD may turn out to be cost-effective when compared with chemical alternatives.

When a mine water is contaminated with Fe²+ and Mn and has an acidity between 150 and 300 mg·L-¹, the ability of an ALD to discharge net alkaline water will depend on the concentration of alkalinity produced by the limestone system. The amount of alkalinity generated by a properly constructed and sized ALD is dependent on chemical characteristics of the acid mine water. An experimental method has been developed that results in an accurate assessment of the amount of alkalinity that will be generated when a particular mine water contacts a particular limestone. The method involves the anoxic incubation of the mine water in a container filled with limestone gravel. In experiments at two sites, the concentration of alkalinity that developed in these containers after 48 h correlated well with the concentrations of alkalinity measured in the ALD effluents at both sites.
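The acidity thresholds discussed above for water contaminated with only Fe²+ and Mn can be summarized as a simple lookup. The function name and the wording of the returned recommendations are hypothetical; the sketch does not cover water that contains Fe³+, Al, or DO.

```python
def post_ald_outlook(acidity_mg_per_l):
    """Summarize the expected ALD outcome for water that is contaminated
    with only Fe2+ and Mn, based on its acidity in mg/L."""
    if acidity_mg_per_l < 150:
        return "ALD followed by a settling pond and an aerobic wetland"
    if acidity_mg_per_l <= 300:
        return ("borderline: run an anoxic limestone incubation test to "
                "estimate the alkalinity the ALD will generate")
    return ("ALD unlikely to discharge net alkaline water: follow the ALD, "
            "settling pond, and aerobic system with a compost wetland")


for acidity in (90.0, 220.0, 450.0):  # hypothetical discharges
    print(acidity, "->", post_ald_outlook(acidity))
```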


Treating Mine Water With Compost Wetland

When mine water contains DO, Fe³+ or Al, or contains concentrations of acidity >300 mg·L-¹, construction of a compost wetland is recommended. Compost wetlands generate alkalinity through a combination of bacterial activity and limestone dissolution. The desired sulfate-reducing bacteria require a rich organic substrate in which anoxic conditions will develop. Limestone dissolution also occurs readily within this anoxic environment. A substance commonly used in these wetlands is spent mushroom compost, a substrate that is readily available in western Pennsylvania. However, any well-composted equivalent should serve as a good bacterial substrate. Spent mushroom compost has a high CaCO3 content (about 10% dry weight), but mixing in more limestone may increase the alkalinity generated by CaCO3 dissolution. Compost substrates that do not have a high CaCO3 content should be supplemented with limestone. The compost depth used in most wetlands is 30 to 45 cm. Typically, a metric ton of compost will cover about 3.5 m² to a depth of 45 cm. This is equivalent to one ton per 3.5 yd². Cattails or other emergent vegetation are planted in the substrate to stabilize it and to provide additional organic matter to “fuel” the sulfate reduction process. As a practical tip, cattail rhizomes should be planted well into the substrate prior to flooding the wetland cell.

Compost wetlands in which water flows on the surface of the compost remove acidity (i.e., generate alkalinity) at rates of approximately 2 to 12 g·m-²·d-¹. This range in performance is largely a result of seasonal variation: lower rates of acidity removal occur in winter than in summer. Research in progress indicates that supplementing the compost with limestone and incorporating system designs that cause most of the water to flow through the compost (as opposed to on the surface) may result in higher rates of limestone dissolution and better winter performance.

Compost wetlands should be sized based on the removal rates in table 19. For an AML site, the calculation is

Minimum wetland size (m²) = Acidity loading (g·d-¹) / 7 (g·m-²·d-¹)     (14)
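Equation 14 can be combined with the compost coverage figure given earlier (about one metric ton per 3.5 m² at a depth of 45 cm) to give a rough materials estimate. The function names and the example acidity loading are hypothetical, and the sketch covers the AML criterion only.

```python
AML_ACIDITY_REMOVAL_RATE = 7.0   # g per m2 per day, from equation 14
COMPOST_COVERAGE_M2_PER_TON = 3.5  # m2 per metric ton at a depth of 45 cm


def compost_wetland_area_m2(acidity_load_g_per_day):
    """Minimum compost wetland area for an AML site (equation 14)."""
    return acidity_load_g_per_day / AML_ACIDITY_REMOVAL_RATE


def compost_required_tons(area_m2):
    """Approximate metric tons of compost for a 45-cm-deep substrate."""
    return area_m2 / COMPOST_COVERAGE_M2_PER_TON


# Hypothetical acidity loading of 20,160 g/d (e.g., 40 L/min at 350 mg/L).
area = compost_wetland_area_m2(20160.0)
print(f"area: {area:.0f} m2, compost: {compost_required_tons(area):.0f} t")
```

Because acidity removal is slower in winter than in summer, a designer may wish to repeat the calculation with a lower removal rate to see how much larger a system sized on winter performance would need to be.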

In many wetland systems, the compost cells are preceded with a single aerobic pond in which Fe oxidation and precipitation occur. This feature is useful where the influent to the wetland is of circumneutral pH (either naturally or because of pretreatment with an ALD), and rapid, significant removal of Fe is expected as soon as the mine water is aerated. Aerobic ponds are not useful when the water entering the wetland system has a pH <4. At such low pH, Fe oxidation and precipitation reactions are quite slow and significant removal of Fe in the aerobic pond would not be expected.

Operation and Maintenance

Operational problems with passive treatment systems can be attributed to inadequate design, unrealistic expectations, pests, inadequate construction methods, or natural problems. If properly designed and constructed, a passive treatment system can be operated with a minimum amount of attention and money.

Probably the most common maintenance problem is dike and spillway stability. Reworking slopes, rebuilding spillways, and increasing freeboard can all be avoided by proper design and construction using existing guidelines for such construction.

Pests can plague wetlands with operational problems. Muskrats will burrow into dikes, causing leakage and potentially catastrophic failure problems, and will uproot significant amounts of cattails and other aquatic vegetation. Muskrats can be discouraged by lining dike inslopes with chainlink fence or riprap to prevent burrowing. Beavers cause water level disruptions because of damming and also seriously damage vegetation. They are very difficult to control once established. Small diameter pipes traversing wide spillways (“three-log structure”) and trapping have had limited success in beaver control. Large pipes with 90° elbows on the upstream end have been used as discharge structures in beaver-prone areas. Otherwise, shallow ponds with dikes with shallow slopes toward wide, riprapped spillways may be the best design for a beaver-infested system.

Mosquitos can be a problem where mine water is alkaline. In southern Appalachia, mosquitofish (Gambusia affinis) have been introduced into alkaline-water wetlands. Other insects, such as the armyworm, have devastated monocultural wetlands with their appetite for cattails. The use of a variety of plants in a system will minimize such problems.
